Hyper-V Gotchas

I’m looking to decommission an older Windows 10 desktop I keep around for testing software. It finally occurred to me I could use my spanking

I was saddened to learn my five- or six-year-old computer couldn’t upgrade to Windows 11. So I bit the bullet and built a

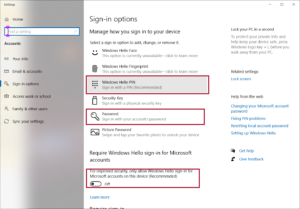

I recently upgraded to the 20H2 (October update) version of Windows 10. It has a number of nice tweaks…and one seriously annoying one. In a

Another day in the Maelstrom of Badly Designed Software, this time involving not just an operating system function but also boot firmware. Geez, if you

So as to keep others from beating their heads bloody working through “problems which shouldn’t be problems”, here’s an important, but little known, fact about

I’ve owned a Cisco Small Business router, model RV-325, for several years, and it’s worked very well as a firewall/router. So well, in fact, that

I bought Barbara an Intel NUC, a tiny (5 inches square, 1.5 inches tall) computer, a couple of years ago. It’s fast, quiet,

I’m a long-time user of the Adobe Creative Suite. So I’m more familiar with Adobe software than I’d like to be…because it is generally insanely

Sometime in the late 90s I decided to learn enough about Linux to wire my house to the internet and run my own email server.

I can’t tell you how much time I’ve spent twiddling my thumbs over the years as various versions of Windows let me know that they’re